Grubhub Autocomplete

2016

Platform(s): iOS, Android, responsive web

Where: Google Play, App Store, Grubhub.com, Seamless.com

Chris Grant - Product Designer

Nirav Patel - Web Development

Galia Kaufman - iOS Development

Peter Daniels - Android Development

Mani Potnuru, Karen Lin - Product Management

Ashley Delose, Richard Chen - User Research

Tools: Sketch, InVision, Zeplin

Grubhub and Seamless have been around for years, but one core feature was missing: a search autocomplete!

I was the lead product designer for Autocomplete on Grubhub and Seamless. It was a much-needed feature for diners that also delivered a personalized, contextual experience, and it contributed to a 3.5% increase in overall conversion (3 times our initial goal).

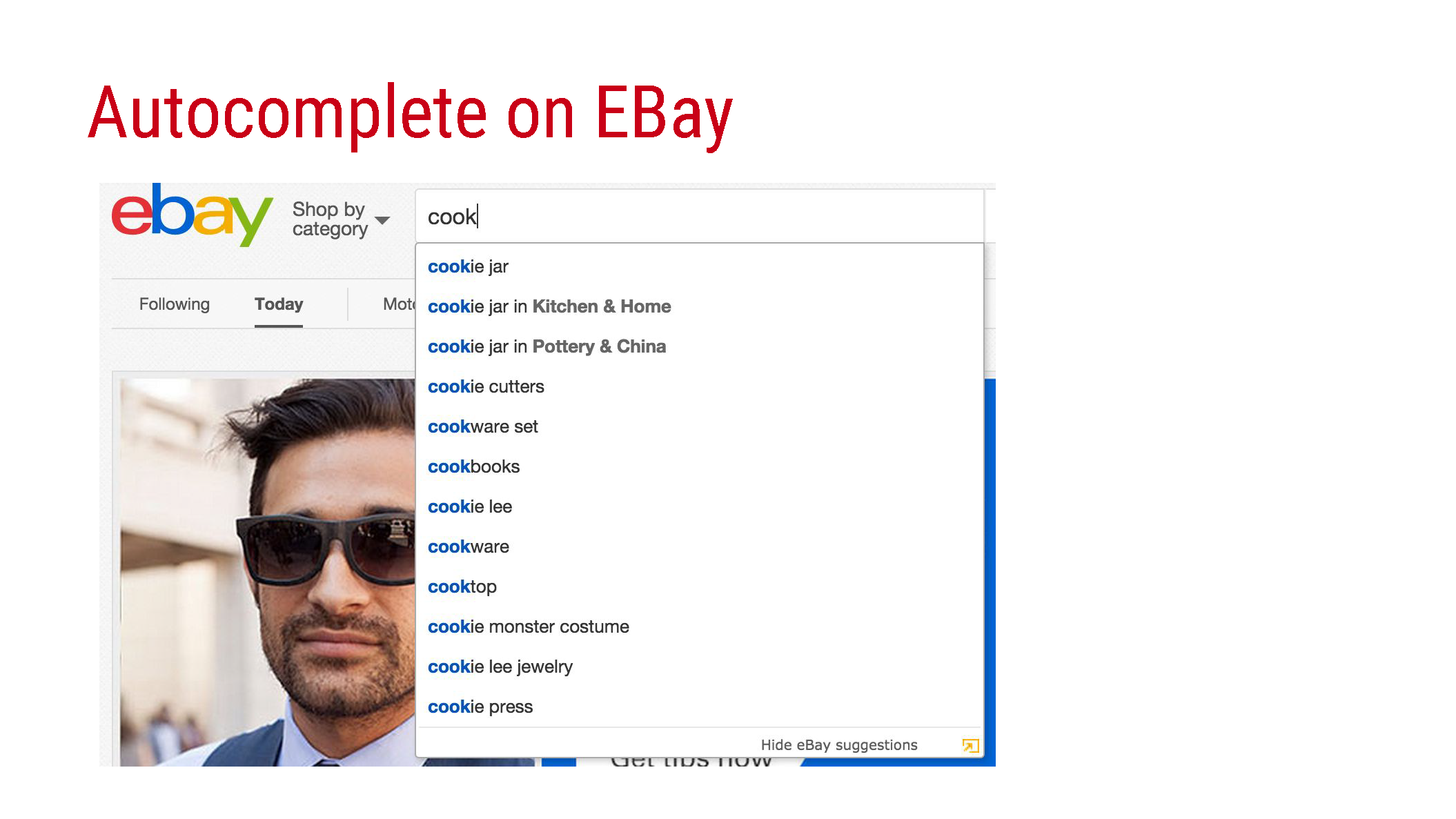

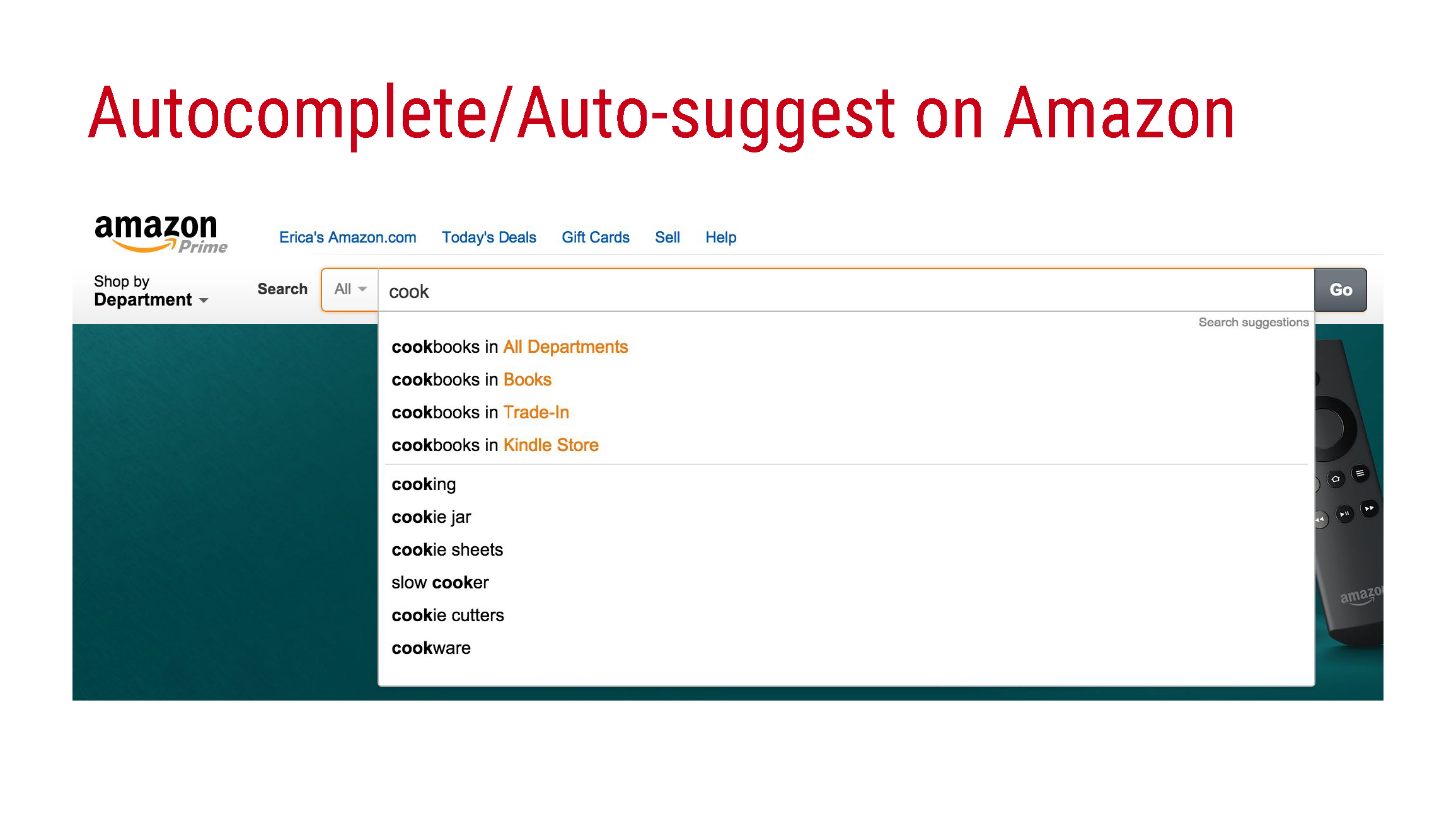

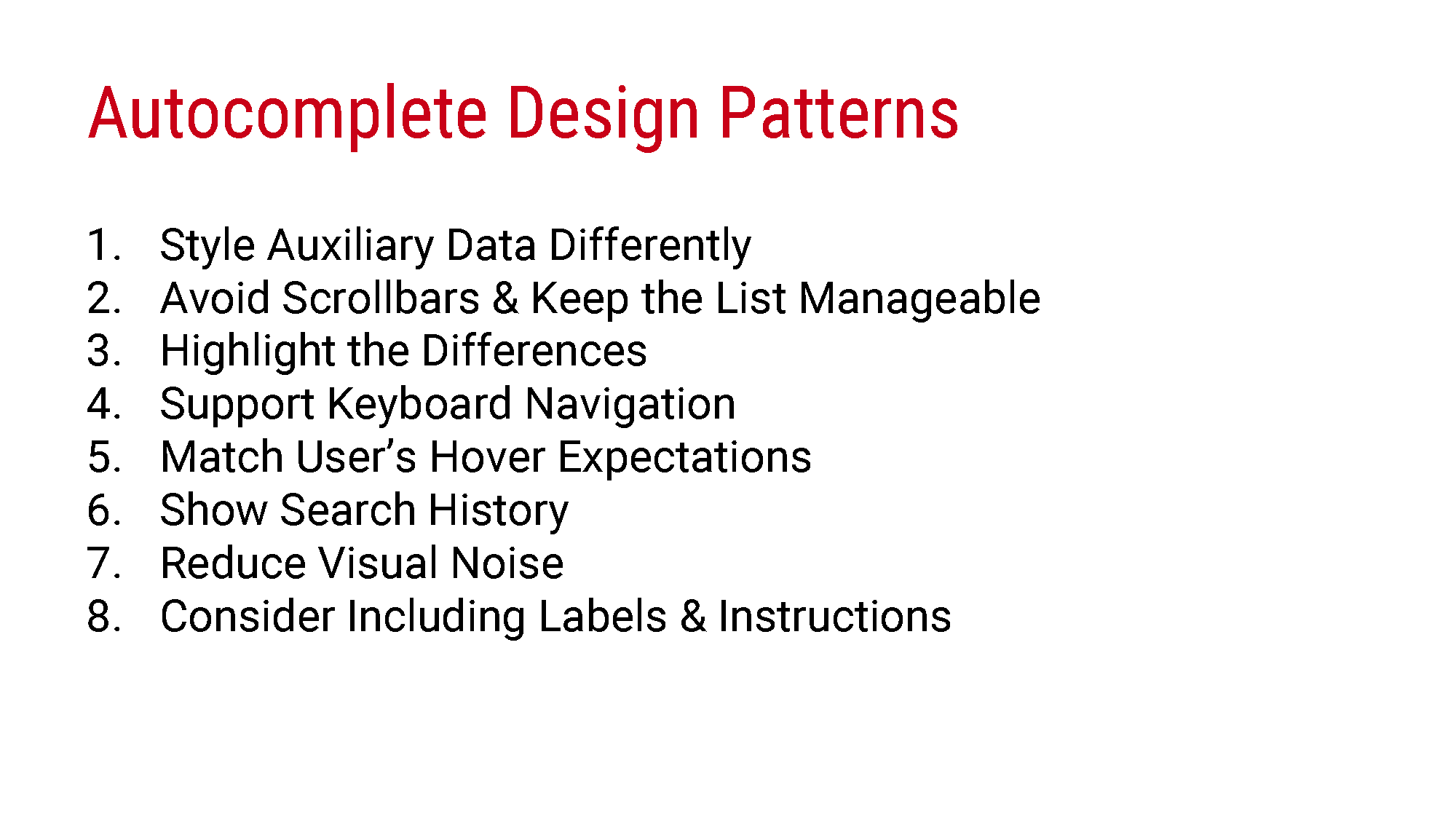

Early in the summer, while we were migrating the Seamless and Grubhub backend systems to a new platform, we were able to consider new additions to our APIs and core features, particularly around ways of conveying restaurant information to our diners, such as open status, pickup / delivery only, and out-of-delivery-zone states. Autocomplete seemed to be both a means of creating an easier experience for our diners and a way to provide more transparency into restaurant status. Some initial research existed around basic design pattern needs, such as maintaining manageable lists without scrollbars, having clear labels, and keeping visual noise low. However, with new APIs and further considerations in our roadmap, we had more room to explore. Along the way, we started to consider themes of personalization as well as contextually aware experiences, particularly on our mobile platforms.

Overview

Prior Research

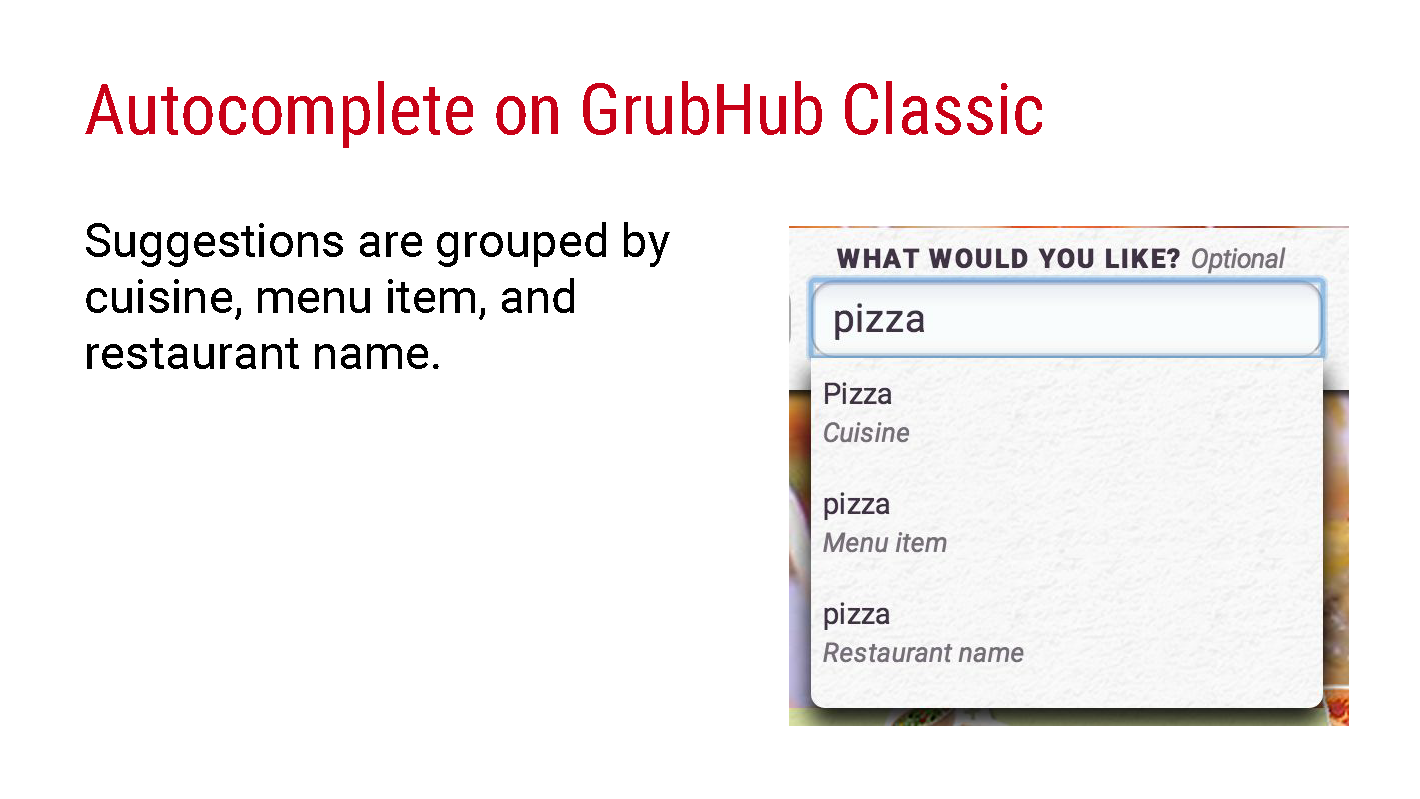

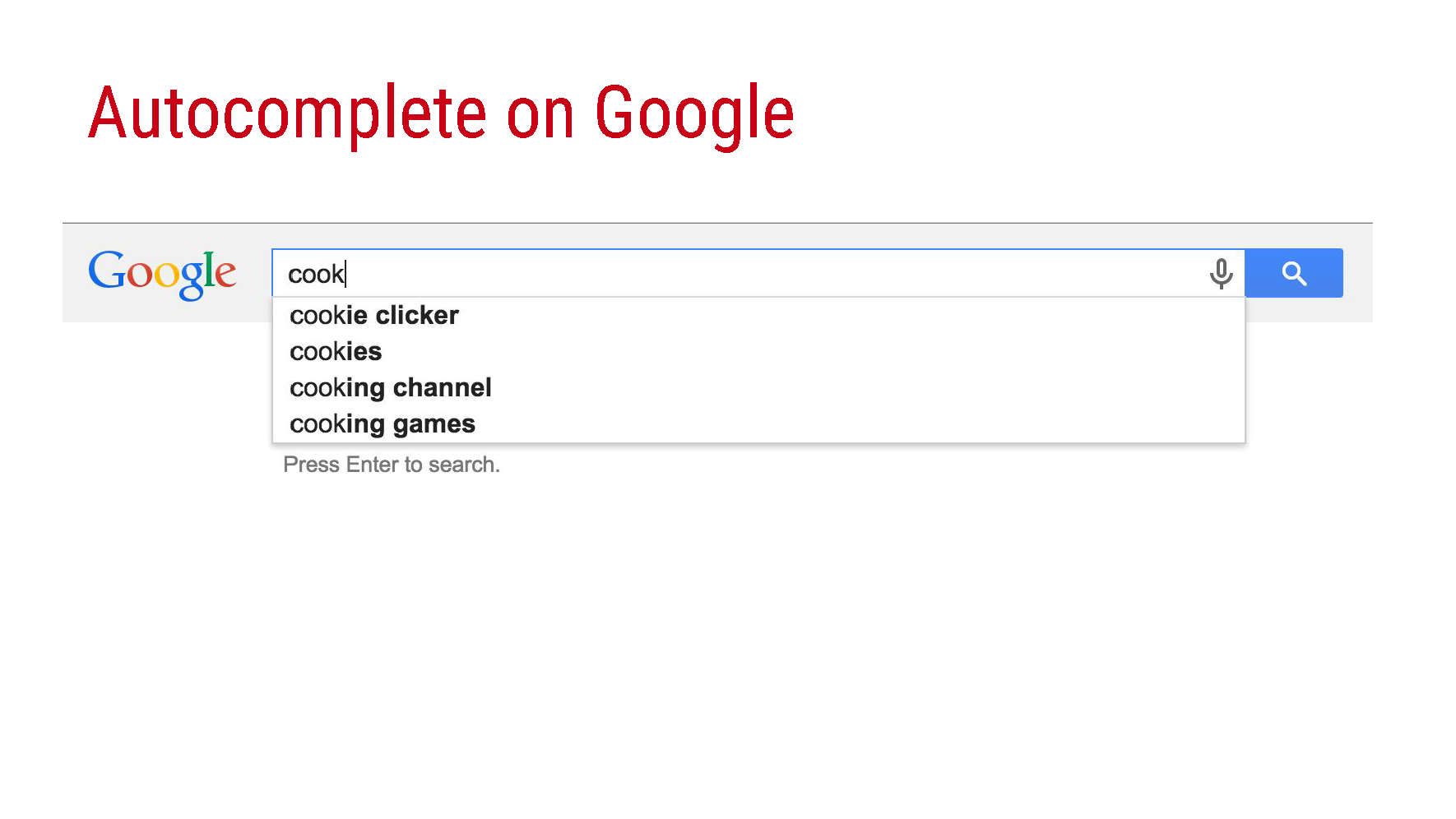

Prior explorations framed autocomplete as a way to solve problems of recall through recognition. These explorations also considered autocomplete as an aid for resolving partial queries rather than simply offering auto-suggest (the ability to search a list for related keywords), as offered in legacy Grubhub platforms. This previous research also included competitive user experience audits, which explored instant/recommended results in autocomplete experiences, as well as guidelines for usability best practices.

This research formed the basis of the design patterns that I would follow and observe, which included:

Styling auxiliary data differently

Avoiding scrollbars / keeping limits on the result lists

Highlighting text match and differences

Keyboard navigation support

Matching user hover expectations

Search history

Reduced visual noise

Consideration for labels and instructions

Evolution (Process + Iterative Design)

In revisiting autocomplete, we started with tests on our web platform, both because of our engineering resources and because this was where the APIs team was starting out.

Given the pre-existing research, the recommendations of design patterns, and the new APIs, we were curious about testing these design patterns with our new style guide, as well as testing the types of information afforded by the autocomplete APIs and their relevance to diners.

Web Platforms

A test of sectioning, color, and restaurant result types (out of delivery zone, closed)

The big questions we were looking to answer here were:

Tests to answer these questions

Do diners care to see autocomplete results that include closed restaurants and/or restaurants out of their delivery zone?

Should such results be active or disabled in view?

How many results?

Should we show certain results under certain contexts?

How do we account for restaurants that may be pickup or delivery only?

Secondary to these questions were tests of possible visual treatments and design language, particularly for hover states, disabled results, labels, and sections.

From user research and usability testing, we were able to conclude that diners care to see both open and closed restaurants, that diners didn’t care to see whether restaurants were pickup only or delivery only, and that diners were able to notice labels without much color contrast. We also learned how many results diners were pleased with, and which visual treatments they noticed for labels and sections as well as for hover and disabled states.

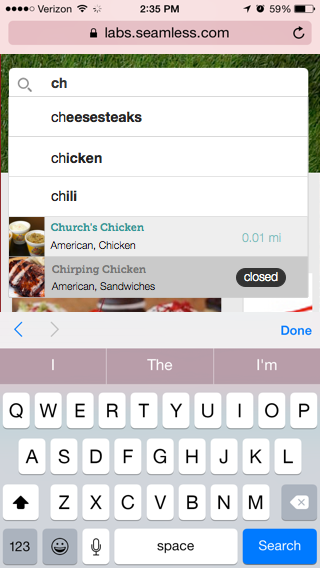

With these findings and the collective learnings from the design iterations, I was able to reach a v1 of autocomplete for responsive web experiences. This v1 included autocompletion for terms and restaurants, labels to indicate closed restaurants, and defined ratios and limits on results so that the list would not scroll or extend beyond the view or fold.
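As a rough illustration of the ratio-and-limit idea, here is a minimal Python sketch. The limit of 8 rows, the 3 slots for terms, the "(Closed)" label text, and all field and function names are hypothetical choices for the example, not the values or code that shipped.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    text: str
    kind: str             # "term" or "restaurant" (hypothetical field)
    is_open: bool = True  # only meaningful for restaurants


def cap_results(terms, restaurants, limit=8, max_terms=3):
    """Return at most `limit` suggestions so the list never needs to scroll.

    Up to `max_terms` slots go to search terms first; restaurants fill the
    rest. If there are too few restaurants, leftover room goes back to terms.
    """
    term_slots = min(len(terms), max_terms)
    restaurant_slots = min(len(restaurants), limit - term_slots)
    # Hand unused restaurant room back to terms.
    term_slots = min(len(terms), limit - restaurant_slots)
    return terms[:term_slots] + restaurants[:restaurant_slots]


def label(suggestion):
    """Render a row, marking closed restaurants with a text label."""
    if suggestion.kind == "restaurant" and not suggestion.is_open:
        return f"{suggestion.text} (Closed)"
    return suggestion.text
```

Keeping the cap and the ratio as parameters makes them easy to adjust as testing suggests different values.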

As our APIs got more sophisticated and we became better informed by data through A/B testing, we were able to bring improvements to our v2, which included distance and a higher result limit.

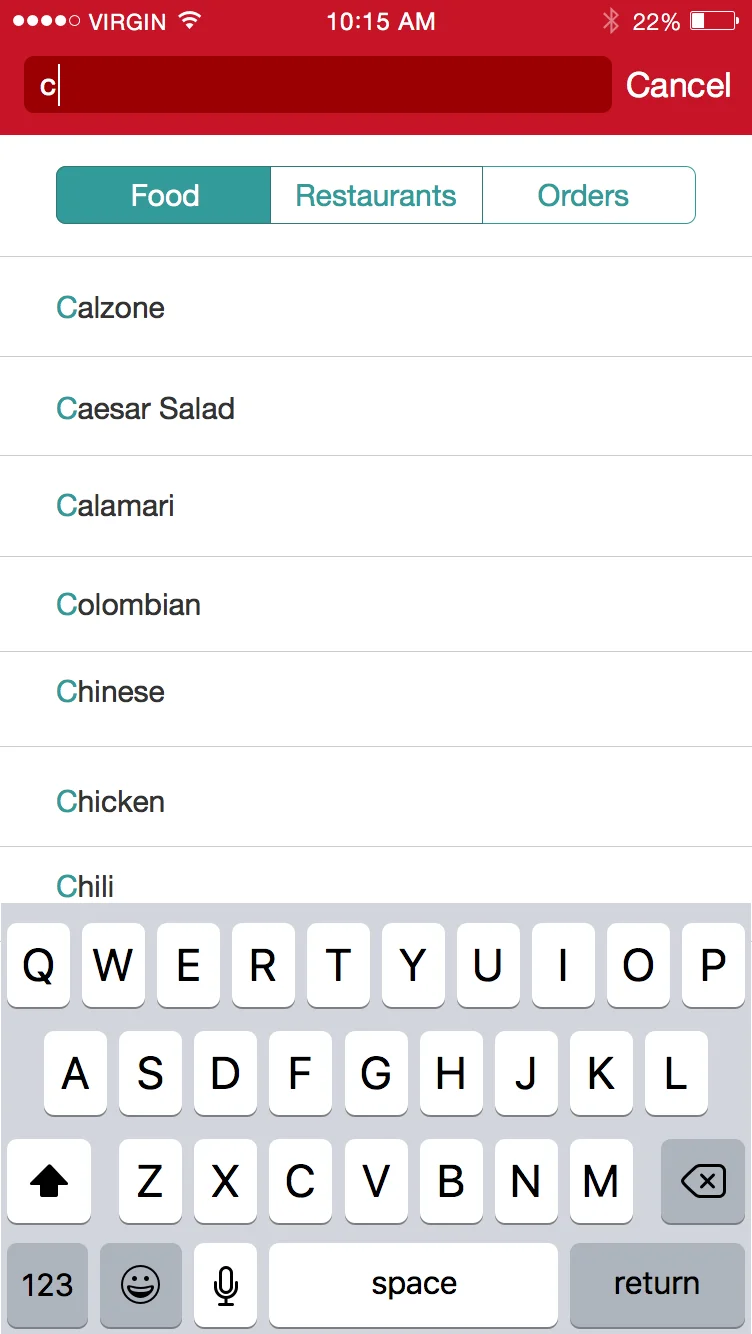

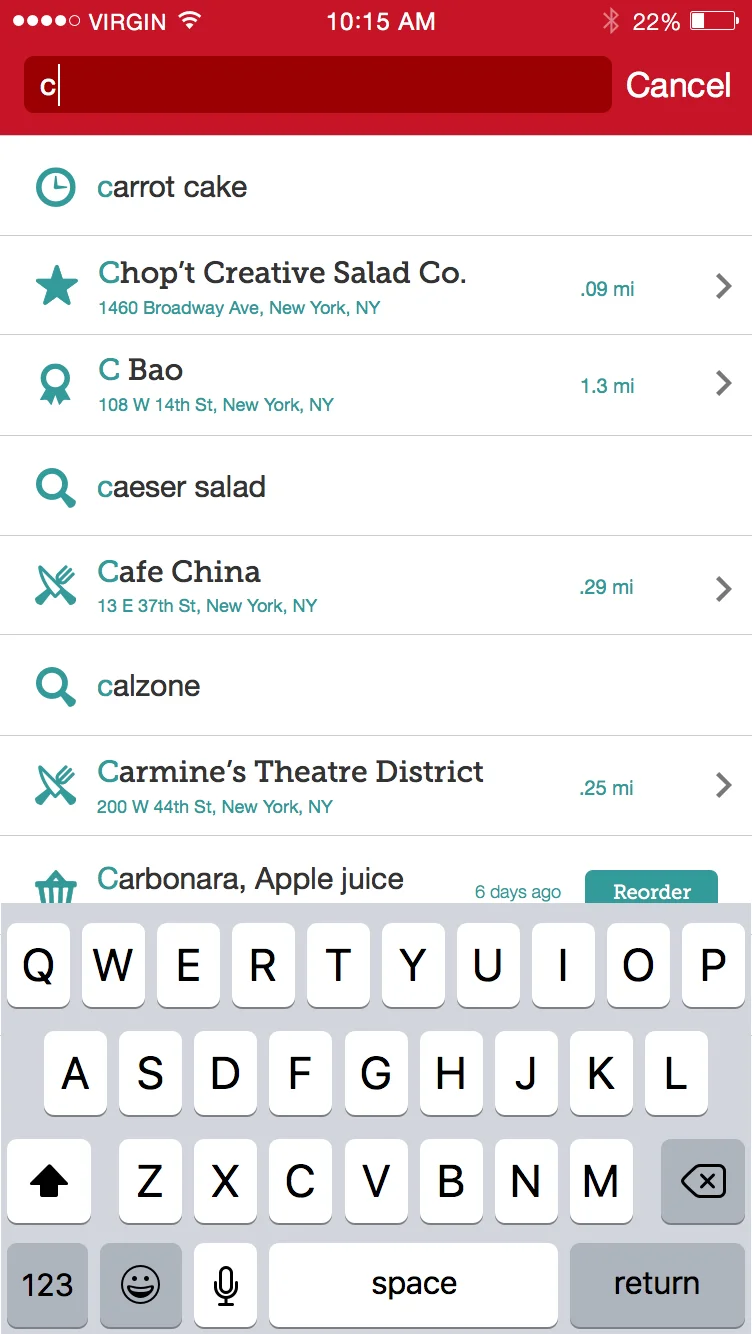

In starting the path of autocomplete for our mobile apps, we took a step back to focus on a very mobile-specific strategy. For some time, we had been noticing that our diners were becoming avid mobile users. During the desktop autocomplete testing, about a third of Seamless and Grubhub diners were mobile users. As we approached autocomplete for the mobile platforms, that number surpassed fifty percent. Designing autocomplete for mobile apps presented an opportunity to use mobile-specific contexts to create a more personalized experience that could gracefully degrade, rather than just a mobile port of the web experience. Given the fidelity of the APIs and our close work with the APIs team, we had flexibility and the opportunity to improve our infrastructure.

Mobile Apps

Pre-kickoff

When we conducted a pre-kickoff for Autocomplete on mobile apps, we were addressing the core problem of diners wanting suggestions for search terms and restaurants so they could quickly search for food. The diner feedback that we had around our existing system was that searching for restaurants was difficult and required an exact match. The recommendations from research at that point were to implement autocomplete and autosuggest, and to clearly distinguish between restaurants, cuisines, and menu items to aid more targeted search intents.

The big question was: given our learnings from web, how should we proceed on mobile to make the best diner experience?

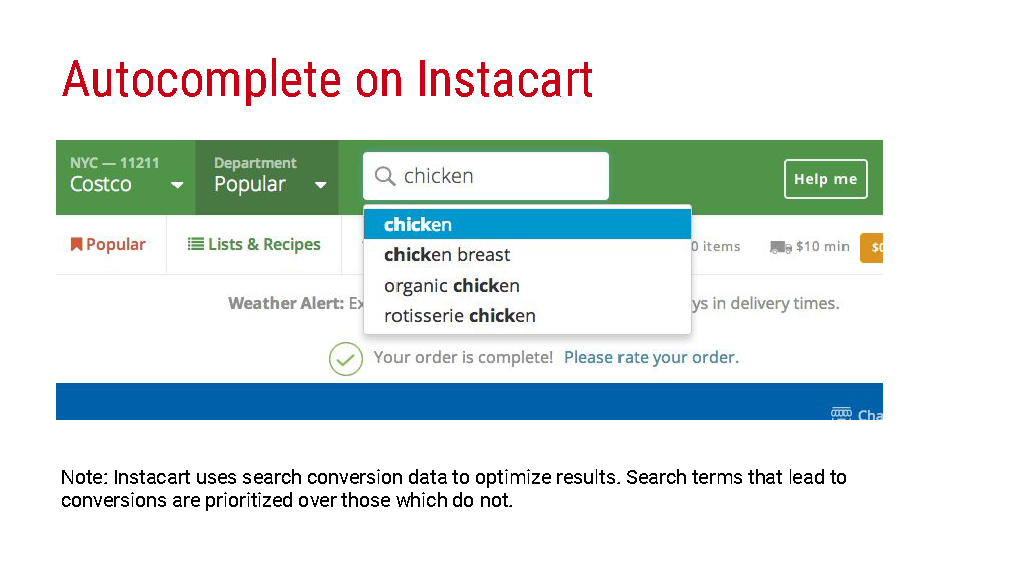

To start, I did a competitive analysis of our mobile web implementation and our main competitors, as well as user experience audits of a variety of mobile autocomplete solutions from a diverse set of apps and contexts.

From my competitive analysis, I noticed a pattern amongst competitor experiences consisting of location as context, context switching for matching and result previewing, imagery and icons for further context, and representations of speed (delivery estimate) or location in the experience.

In my user experience audits, I found some very interesting points. I felt encouraged to explore avenues involving showing all possible content, content the way it should be (allowing for distinct interactions), location contexts, iconography, imagery, and different ways of differentiating result types (sectionals or tabular).

![Autocomplete - a search utility [Pre-kickoff]_Page_01.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163854673-YV0S9C5UFBOCLN2VKQQ5/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_01.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_02.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163854670-5NPP7PJGTOQ1NIS5ZOYB/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_02.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_03.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163855034-RXXI5C0T7X5ZNDCYOC3K/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_03.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_04.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163855015-T3CQ1G9LQMMVNK3JRKSW/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_04.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_05.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163855221-ZB8PT0P1033Q81K2XCNM/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_05.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_06.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163855507-VM03BRY134SU6SIT3HVL/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_06.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_07.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163855587-ETB5EDFYV4ZXAWD9LRTI/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_07.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_08.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163855945-DB5Y6MCU8Q0F40H8HX8I/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_08.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_09.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163855880-BMFJ1SNLZIJH614PLRVF/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_09.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_10.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163856150-FB062H54ZH2G5EMLTUFB/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_10.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_11.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163856233-Y5YKOV03MVXXWZIJUFGW/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_11.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_12.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163856378-31BI2727HFNGWRWYR9T2/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_12.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_13.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163856474-4BWY5GKJ26MTT6WAUGNI/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_13.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_14.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163856626-54MC9QJCMY75UNP6I2WC/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_14.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_15.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163856701-UHBXQKNZI2JEI6PSETJS/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_15.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_16.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163856903-ANEERTFTWQFXZPVBLP9X/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_16.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_17.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163856950-R1QVSD05S13GZLLG9NBC/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_17.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_18.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163857173-LGQ5XK8XLMPP1090MR0W/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_18.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_19.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163857186-RQWY13USQNFYO0M4KUCK/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_19.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_20.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163857415-R12GLR903WWDVILMZKYA/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_20.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_21.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163857451-89W8WZHUCDT8ZAWZ5OUF/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_21.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_22.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163857654-YYV3VJL6UF4SAMDH84NG/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_22.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_23.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163857917-GD7NR4Z1LMRRUV4MJIUF/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_23.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_24.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163857882-TKZV4A4MAFQDHNSAYCB5/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_24.png)

![Autocomplete - a search utility [Pre-kickoff]_Page_25.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1462163858075-515RF5UZKIIVQO6W3EFH/Autocomplete+-+a+search+utility+%5BPre-kickoff%5D_Page_25.png)

User Research & Testing

Following the pre-kickoff and subsequent stakeholder conversations, we felt there was a lot of opportunity in exploring the personalization narrative in the context of our autocomplete strategy.

Given the insights from my analyses in pre-kickoff, I worked in collaboration with our user research team to gain insights and perhaps answer questions around content (how personalized? what type?), views (tabular?), the significance of location contexts, and differentiation of results (iconography? sections?). These questions led to hypotheses that we constructed around content preferences, personalization, and view specifics, which worked in tandem with my design process.

Ultimately, I arrived at two InVision prototypes to test these hypotheses:

Test A

Test B

empty state as an opportunity for personalization

From testing, we found that diners identified with the content, often experienced confusion with icons, and were split between tests A and B as a matter of preference. Fans of test A, the tabular view, felt that items were more organized and relevant to their intended search, whereas fans of test B felt that seeing everything was more straightforward. Given the lower risk of confusion and ease of maintaining organization, we went with test A over test B for further development and design.

At Grubhub, following user testing, designers hold kickoffs, which are meetings to sort out the particulars required for development and further design, with the intent of completing research for a version of a product feature or release.

I held a kickoff, with stakeholders, design, and development, including a compilation of our cohesive vision for mobile autocomplete, versioning, implementation requirements, success metrics, risks, and items for further exploration.

After user testing, our main questions were around the timing and handling of our APIs, failure scenarios, result ratios, and surfacing restaurant availability. The kickoff allowed us to initiate conversations to address these, as well as align our engineering, product, and design efforts around a skateboard, or MVP, version of autocomplete for mobile platforms.

Kickoff

![Autocomplete - a search utility [kickoff]_Page_01.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1466264519252-9WO53ZBBQH870SRTX4LC/Autocomplete+-+a+search+utility+%5Bkickoff%5D_Page_01.png)

![Autocomplete - a search utility [kickoff]_Page_02.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1466264519254-P9WU6Q6NII15EQK6DL6G/Autocomplete+-+a+search+utility+%5Bkickoff%5D_Page_02.png)

![Autocomplete - a search utility [kickoff]_Page_03.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1466264519545-TILN944VSG22GT8JUCPG/Autocomplete+-+a+search+utility+%5Bkickoff%5D_Page_03.png)

![Autocomplete - a search utility [kickoff]_Page_04.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1466264519460-4UGD4IG1B5O7W0G1U72F/Autocomplete+-+a+search+utility+%5Bkickoff%5D_Page_04.png)

![Autocomplete - a search utility [kickoff]_Page_05.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1466264519613-JKBMTDLRZFIM6XZ30TZ3/Autocomplete+-+a+search+utility+%5Bkickoff%5D_Page_05.png)

![Autocomplete - a search utility [kickoff]_Page_06.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1466264519667-MOD990G5XD2909TIX5E2/Autocomplete+-+a+search+utility+%5Bkickoff%5D_Page_06.png)

![Autocomplete - a search utility [kickoff]_Page_07.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1466264519779-VP4CWYTOLBHROGBF693G/Autocomplete+-+a+search+utility+%5Bkickoff%5D_Page_07.png)

![Autocomplete - a search utility [kickoff]_Page_08.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1466264519764-TLJC9R1RXTQUFD9E9BXE/Autocomplete+-+a+search+utility+%5Bkickoff%5D_Page_08.png)

![Autocomplete - a search utility [kickoff]_Page_09.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1466264519854-SOCM8IL58W2YRGMYLJW7/Autocomplete+-+a+search+utility+%5Bkickoff%5D_Page_09.png)

![Autocomplete - a search utility [kickoff]_Page_10.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1466264519949-TM06F4FO53S8FZXASAX0/Autocomplete+-+a+search+utility+%5Bkickoff%5D_Page_10.png)

![Autocomplete - a search utility [kickoff]_Page_11.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1466264519944-J4SL0DJ9XDL3TORGXWXQ/Autocomplete+-+a+search+utility+%5Bkickoff%5D_Page_11.png)

![Autocomplete - a search utility [kickoff]_Page_12.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1466264520033-PAAROCT53NJ8BKT7E22W/Autocomplete+-+a+search+utility+%5Bkickoff%5D_Page_12.png)

![Autocomplete - a search utility [kickoff]_Page_13.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1466264520124-NFCL3DEFJXYSZMSBLS2J/Autocomplete+-+a+search+utility+%5Bkickoff%5D_Page_13.png)

![Autocomplete - a search utility [kickoff]_Page_14.png](https://images.squarespace-cdn.com/content/v1/5436238de4b0193dcc7ead07/1466264520159-PMTKKPSW1HN77YYP7TL9/Autocomplete+-+a+search+utility+%5Bkickoff%5D_Page_14.png)

In preparation for our kickoff, I worked with Karen (mobile product manager), to conceive of possible versions, from skateboard to car, of autocomplete for native mobile. This exercise required product management knowledge in concert with design thinking and understanding of our users from research. Karen and I aligned these factors to consider the role autocomplete could serve in multiple contexts of features, such as future ordering and reordering, as well as reaching the most personalized experience possible.

For our skateboard, we arrived at a version that would be the minimum viable acceptable experience for our diners, given our then-current technical capabilities and development time for a Q1 release: an autocomplete focused on dish terms, restaurant suggestions, and past search history for open-now-only restaurants, with tabular view controls.

Versioning / Designing For The Future

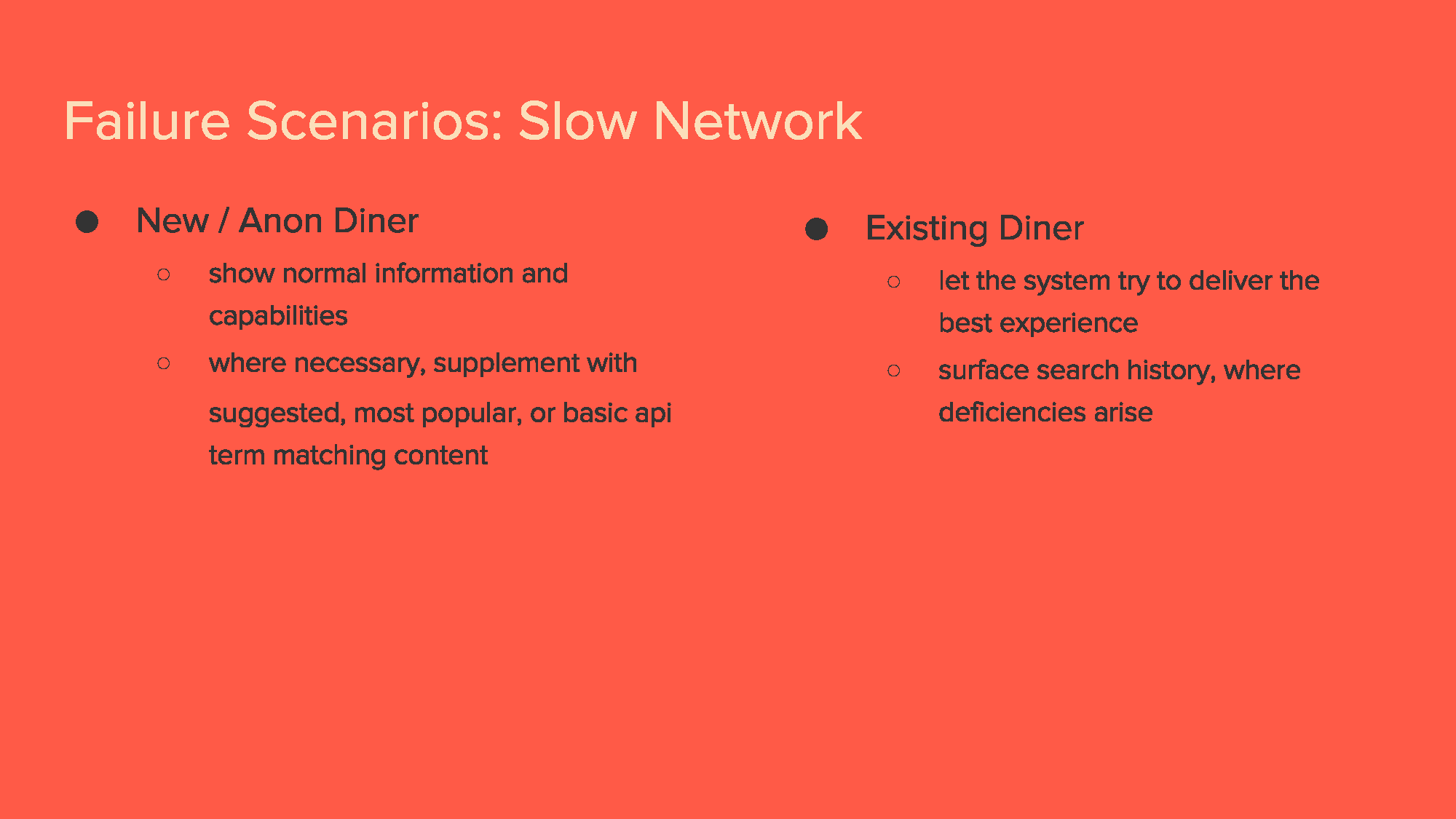

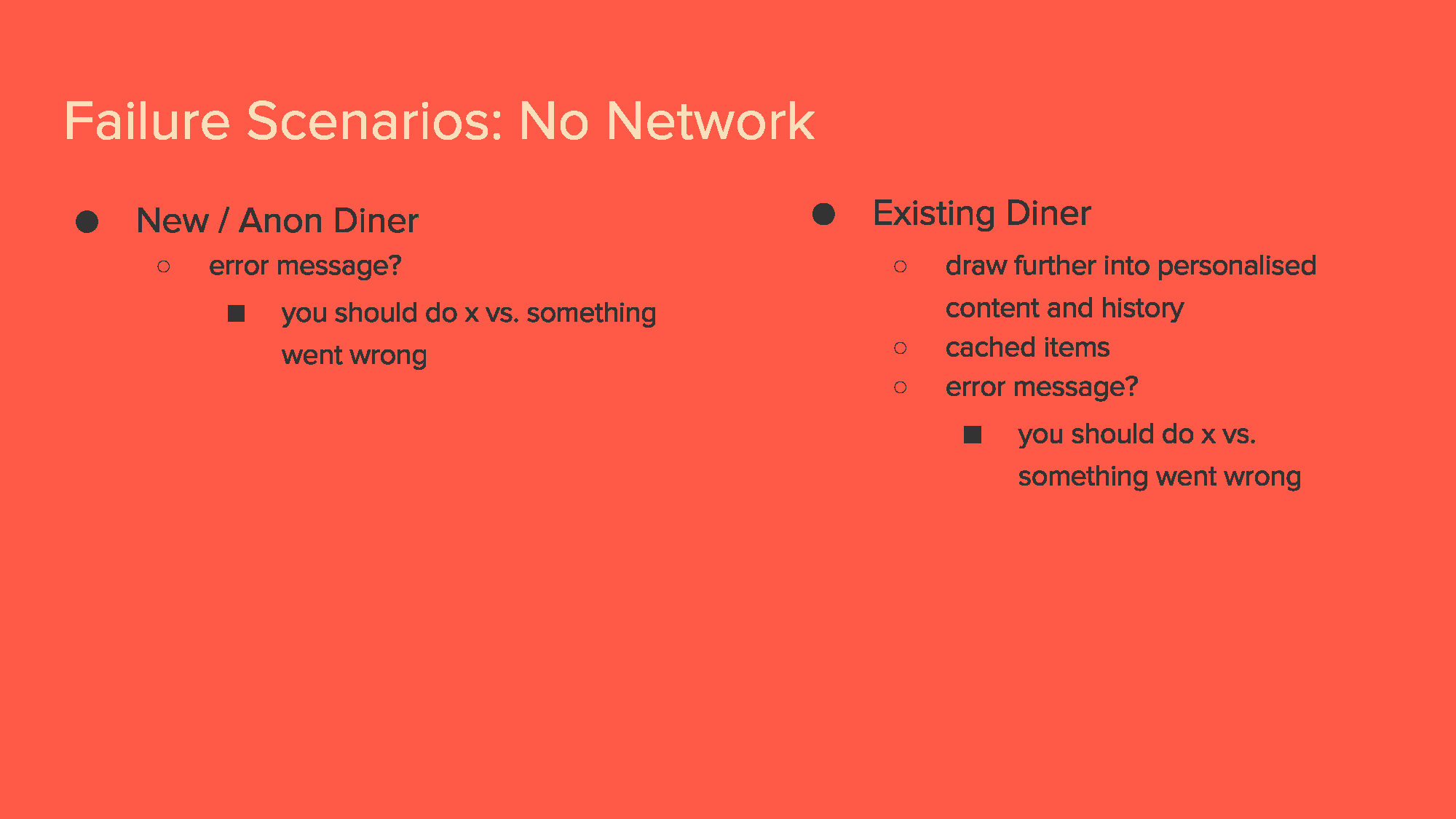

A major component that we had to think about for autocomplete on mobile was failure scenarios. Diners always want to experience something, and in the worst-case scenario they want to understand that something went wrong without feeling discomfort. At the time, our apps didn’t have the best experiences for handling errors: diners would often encounter empty states and/or dialogs without much direction. In addressing failure scenarios for mobile autocomplete, I had to consider solutions for cases such as slow networks or no network, make the experience personable and gracefully degradable, and set a precedent for our failure experiences.

In thinking about failure scenarios, I was designing for the cases of slow network, no network, and API failures, while considering how the experience would differ for new/anonymous diners and existing diners, for example by drawing on personalized content and history.

I also arrived at the concept of an “offline mode” throughout our apps. An “offline mode” would let diners carry on as per usual, where cached or device local content may replace network dependent content to deliver a minimum experience despite network or other possible failures.

For our final skateboard experience, we’re using the offline methodology, as well as the error handling from the graceful degradation strategy that I had designed. The benefit is that the diner only hits a true failure when executing the search, and still has a viable pre-search experience in the worst-case scenario.
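To make the degradation order concrete, here is a minimal Python sketch of the fallback logic, assuming a network call that may fail and a list of past searches stored on the device. The function names, the prefix-matching rule, and the limit of 5 are hypothetical and only illustrate the idea; they are not the apps' actual implementation.

```python
def suggest(query, fetch_remote, local_history, limit=5):
    """Return (suggestions, source), degrading from network to on-device data.

    `fetch_remote` is any callable that returns a list of strings, or raises
    on a timeout, a missing network, or an API failure.
    """
    try:
        return fetch_remote(query)[:limit], "network"
    except Exception:
        # Broad catch for the sketch: any failure falls through to local data
        # instead of surfacing an error dialog before the diner has searched.
        pass

    q = query.strip().lower()
    cached = [h for h in local_history if h.lower().startswith(q)]
    if cached:
        return cached[:limit], "history"
    # New or anonymous diner with nothing cached: an empty but valid list.
    return [], "empty"
```

Returning the source alongside the suggestions lets the interface decide whether to show a subtle offline hint, while the diner can keep typing in every case.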

Designing For Failure

At the conclusion of our kickoff, we were clear to focus on design and development of our autocomplete v1 skateboard for production. This involved me working closely and collaborating with the Android, iOS, and autocomplete APIs teams for quality assurance of test builds, addressing issues, communicating designs and interactions, considering edge cases, as well as improvements to both the experience and the APIs. The APIs side was quite an interesting collaborative effort because not only was I using the knowledge from the APIs team to design, but I was also helping the APIs team to build a better API through design thinking and consideration of user needs.

Getting v1 / Skateboard To Production

Testing interaction design nuances of platforms

Testing network failure experiences.

Our main success metrics were to increase the number of orders placed via autocomplete suggestions vs. full search, as well as search bar usage, and to decrease session time, number of clicked results per number of total displayed search results, number of viewed restaurant menus before an order is placed, and the length of search terms.

These metrics were tracked via basis point measures, where our primary goal was to increase conversion rate from regular search vs. autocomplete search for all brands and apps by 100bp.

We exceeded these expectations within the first week and went on to triple our successes in week two, via an increased conversion rate of 315bp.
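For readers less familiar with basis points, the arithmetic behind these figures can be sketched in a few lines of Python. The helper name is hypothetical; the only inputs are the 100bp goal and 315bp result stated above.

```python
def bp_to_percentage_points(bp):
    """One basis point is one hundredth of a percentage point."""
    return bp / 100


goal_bp = 100      # primary goal stated above
observed_bp = 315  # week-two result stated above

print(bp_to_percentage_points(goal_bp))      # 1.0
print(bp_to_percentage_points(observed_bp))  # 3.15
print(observed_bp / goal_bp)                 # 3.15
```

In other words, the goal was a lift of one percentage point, and the week-two result was a little over three times that.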

Moreover, the v1 autocomplete contributed to a 3.5% increase in overall conversion. This is quite significant given that one of our company product goals for the year was to increase conversion by 5%.

We also saw an upward trend in our Apple App Store and Google Play Store ratings.

Success Metrics And Impact

One thing I would’ve loved to have done differently was increase the fidelity of our prototypes to ease both research and the transition to development. Originally, I was hoping to use a high-fidelity tool like Origami to do this, but we couldn’t fit it into our plan, nor did I have approval to stray from InVision and our current tools. Using a tool like Origami or Framer would’ve helped make the experience even more fluid for our test subjects, and reduced miscommunication about the interaction designs with our developers, during both QA and development rounds.

If we had the ability to, I would’ve loved for us to use a beta release program to get some more data around particulars like ideal result to term ratios, etc.

Designing mobile first and considering how experiences can be consistent and compelling across all platforms, and launching in tandem, would’ve been something that I would’ve loved us to do in hindsight, but I feel that we weren’t able to do that due to differing priorities, dependencies, and bandwidth across teams at the time.

What Would I Have Done Differently

Given the success of autocomplete’s skateboard version and current metrics suggesting potential for the empty state, we can confidently move up the steps in our version definitions.

The dependencies at the moment are our implementations of favoriting systems for restaurants and/or dishes, as well as the cases of future ordering. In any case, we could move to either our scooter, bike, or in an ideal scenario, our car version as the next step in autocomplete’s version history.